Are All ELP Tests Created Equal? And Why Are Some ELP Examiners Stricter Than Others?

ELP results vary by test centre. Examiner calibration could make ICAO descriptors consistent, fairer for candidates, and safer for aviation.

The Problem: A Lack of Standardisation in English Language Proficiency (ELP) Testing

Standardisation in English Language Proficiency (ELP) testing is, for reasons that make no sense to me, pretty much non-existent. As a certified ELP examiner with a background as a Cambridge speaking examiner, I’ve seen what true standardisation looks like: a single central body that designs the rating scale, creates all the tests, trains every examiner, and runs annual certification sessions - worldwide. This approach ensures everyone is on the same page.

✈

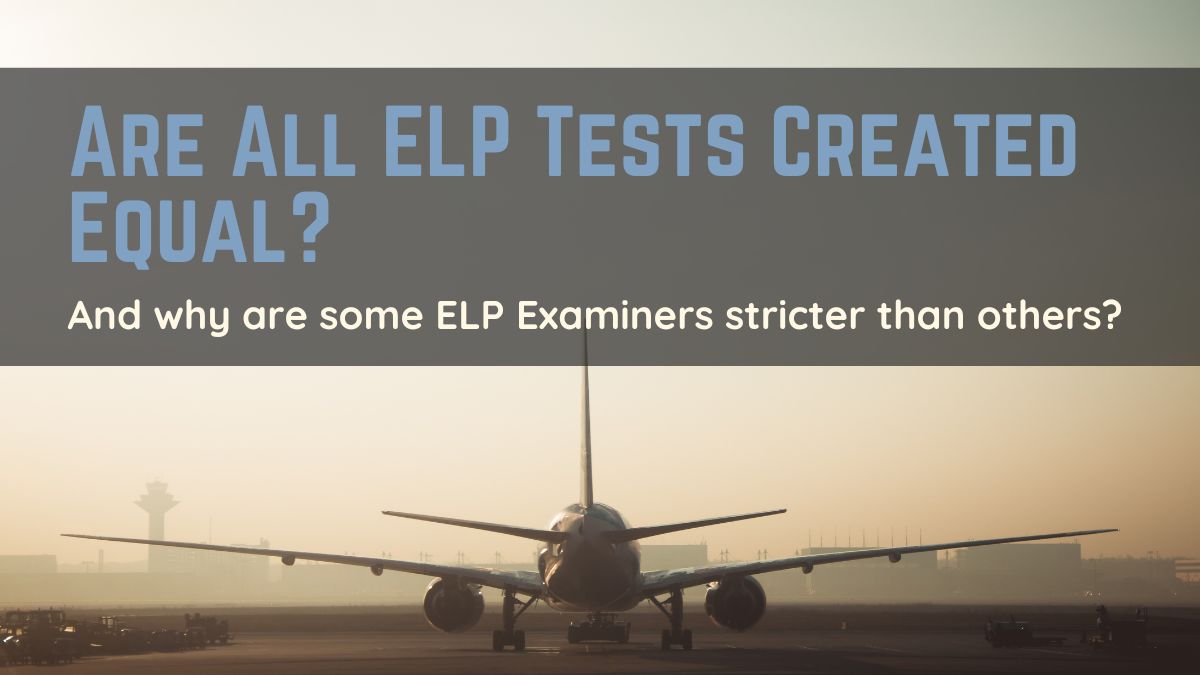

Now, contrast that with ELP testing in aviation. ICAO creates the Rating Scale with descriptors for each criterion (Pronunciation, Structure, Vocabulary, Fluency, Comprehension, and Interactions) and each level (1 to 6), but each testing centre is left to interpret those descriptors as it sees fit. There’s no central body ensuring everyone is applying the criteria in the same way, no global standardisation sessions, and no requirement for examiners to calibrate their scoring with colleagues elsewhere. The result? Some centres become known for being a bit lenient, others much stricter, and there’s a real lack of consistency in how candidates are assessed from one centre to another.

What True Calibration Looks Like: The Cambridge Model

Let me explain how the Cambridge process works in more detail. Once a year, all examiners worldwide must renew their certification. These sessions can be face-to-face or, more commonly now, on an online platform. The process begins with a video of a test taking place (created by Cambridge) featuring dummy candidates. Examiners are provided upfront with the official scores assigned by the Cambridge team, who have applied the rating scale descriptors exactly as intended. This first stage, “setting the standard,” lets us see precisely what Cambridge expects and why. Afterwards, there’s a feedback session to clear up any doubts.

✈

Next comes “applying the standard.” We watch two more dummy test videos, scoring the candidates ourselves for each category, without knowing in advance how Cambridge scored them. Once our marks are submitted, we compare them to Cambridge’s official scores to see how closely we align.

✈

The final stage is the test itself: we watch a fourth video, rate the candidate, and submit our scores, again, without knowing what Cambridge deems correct. Cambridge then lets us know whether we’ve passed or failed. If we pass, we’re certified for the next twelve months. If not, we get a second attempt; if we fail again, we’re not permitted to examine for that year and must wait until the following year to try again. The process is strictly individual - no discussion or group negotiation - making it a true test of each examiner’s understanding and consistency.

✈

This system isn’t totally foolproof - an examiner could theoretically pass the refresher and demonstrate that they can apply the standards as intended, but then apply a different set of standards when examining real candidates. But if a similar system existed in aviation, at least we could be sure that we are all able to interpret the ICAO rating criteria as ICAO intended, and are capable of calibrating ourselves to that standard - something which is currently lacking.

✈

The Real-World Consequence: Inconsistency and “Test Centre Shopping”

A case in point: I once examined a candidate who, in my view, was a solid level 4 in every area except pronunciation. His accent was so strong that, even after replaying the recording multiple times, I could barely understand what he was trying to say throughout significant portions of the test. I ultimately gave him a 3 for pronunciation, as the descriptor states: “Pronunciation, stress, rhythm and intonation are influenced by the first language or regional variation and frequently interfere with ease of understanding.” In this candidate’s case, his pronunciation genuinely did frequently interfere with my ability to understand him. And, as per the rules, a level 3 for pronunciation meant a level 3 overall - a fail.
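
To make that rule concrete, here is a minimal Python sketch of how the overall level follows from the six criterion scores: the overall level is simply the lowest of the six. The function and variable names are my own illustration, not part of any official ICAO tool, and the pass mark of 4 reflects the fact that a level 3 overall is a fail.

```python
# Illustrative sketch only: names are hypothetical, not an official ICAO tool.
CRITERIA = (
    "pronunciation", "structure", "vocabulary",
    "fluency", "comprehension", "interactions",
)
PASS_LEVEL = 4  # a level 3 overall is a fail, so 4 is the minimum pass


def overall_level(ratings):
    """Return the overall level: the lowest score across the six criteria."""
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    return min(ratings[c] for c in CRITERIA)


def passes(ratings):
    """True if the overall level reaches the pass mark."""
    return overall_level(ratings) >= PASS_LEVEL


# The candidate from the story: level 4 everywhere except pronunciation.
candidate = {c: 4 for c in CRITERIA}
candidate["pronunciation"] = 3

print(overall_level(candidate))  # 3
print(passes(candidate))         # False
```

One weak criterion decides the result, which is exactly why a different reading of a single descriptor can turn a fail into a pass.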

Less than a month later, I learned that he’d taken the test at another centre elsewhere in the EU and had been awarded a level 5. The official descriptor for level 5 pronunciation reads: “Pronunciation, stress, rhythm and intonation, though influenced by the first language or regional variation, rarely interfere with ease of understanding.” RARELY INTERFERE. How could a candidate go from a 3 for pronunciation to a 5 in less than a month? Realistically, they can’t. The issue is that the other testing centre clearly had a very different interpretation of the words “rarely interfere” and “frequently interfere” compared to mine. So, who was correct? Well, if we had proper calibration of examiners, we’d know for certain. But I’ll stick my neck out and say, hand on heart, this candidate should not have been awarded a level 5 by anyone’s standards.

✈

This isn’t just an isolated story - these inconsistencies are well known in the industry. They lead to candidates going ‘test centre shopping’, looking for the easiest place to pass rather than the fairest. The consequence? Some end up with a higher English level on paper than they actually possess, which has serious implications for safety and undermines the credibility of the whole testing system. Strict centres get a reputation for being ‘too hard’, while lenient ones become magnets for those seeking an easy pass. None of this serves the purpose of ELP testing, which is to ensure safe and effective communication in aviation.

✈

A Simple Solution: Calibrating ELP Examiners

So, what’s stopping us from adopting a Cambridge-style approach to ELP rater training? How hard can it be? Imagine if, each year, a central authority produced four short videos of dummy ELP candidates: one to establish the standard, two for examiners to practise their scoring, and a final one to formally assess their calibration. All testing bodies could incorporate these videos into their annual refresher training, ensuring that every examiner is interpreting the descriptors in line with ICAO’s intended standard. Those who don’t score within an acceptable margin would need further training before being allowed to rate candidates again.
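
For anyone wondering what “within an acceptable margin” might look like in practice, here is a rough Python sketch. It compares an examiner’s scores for the final video against the reference scores and flags any criterion that is too far off. Everything here is an assumption for illustration: the tolerance of one level, the function names, and the reference scores are hypothetical, and I am not describing how Cambridge or ICAO actually set their thresholds.

```python
# Illustrative sketch only: the tolerance and all names are hypothetical.
CRITERIA = (
    "pronunciation", "structure", "vocabulary",
    "fluency", "comprehension", "interactions",
)


def deviations(examiner, reference):
    """Per-criterion difference: positive means the examiner was more lenient."""
    return {c: examiner[c] - reference[c] for c in CRITERIA}


def is_calibrated(examiner, reference, tolerance=1):
    """True if every criterion is within `tolerance` levels of the reference."""
    return all(abs(d) <= tolerance
               for d in deviations(examiner, reference).values())


# Hypothetical reference scores for the assessment video.
reference = {c: 4 for c in CRITERIA}
reference["pronunciation"] = 3

aligned_examiner = dict(reference)                    # matches the reference
lenient_examiner = dict(reference, pronunciation=5)   # two levels too generous

print(is_calibrated(aligned_examiner, reference))                 # True
print(is_calibrated(lenient_examiner, reference))                 # False
print(deviations(lenient_examiner, reference)["pronunciation"])   # 2
```

A stricter scheme could set the tolerance to zero on any criterion that sits on the pass/fail boundary, since that is where a one-level disagreement changes the outcome.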

✈

I can but live in hope.

✈

The Bottom Line

For the sake of fairness, reliability, and trust in the system, ICAO language proficiency descriptors should mean the same thing to every examiner, everywhere. Don't you think?

Categories: ICAO Rating Scale

Nikki Jane